Auto nom

Project Description

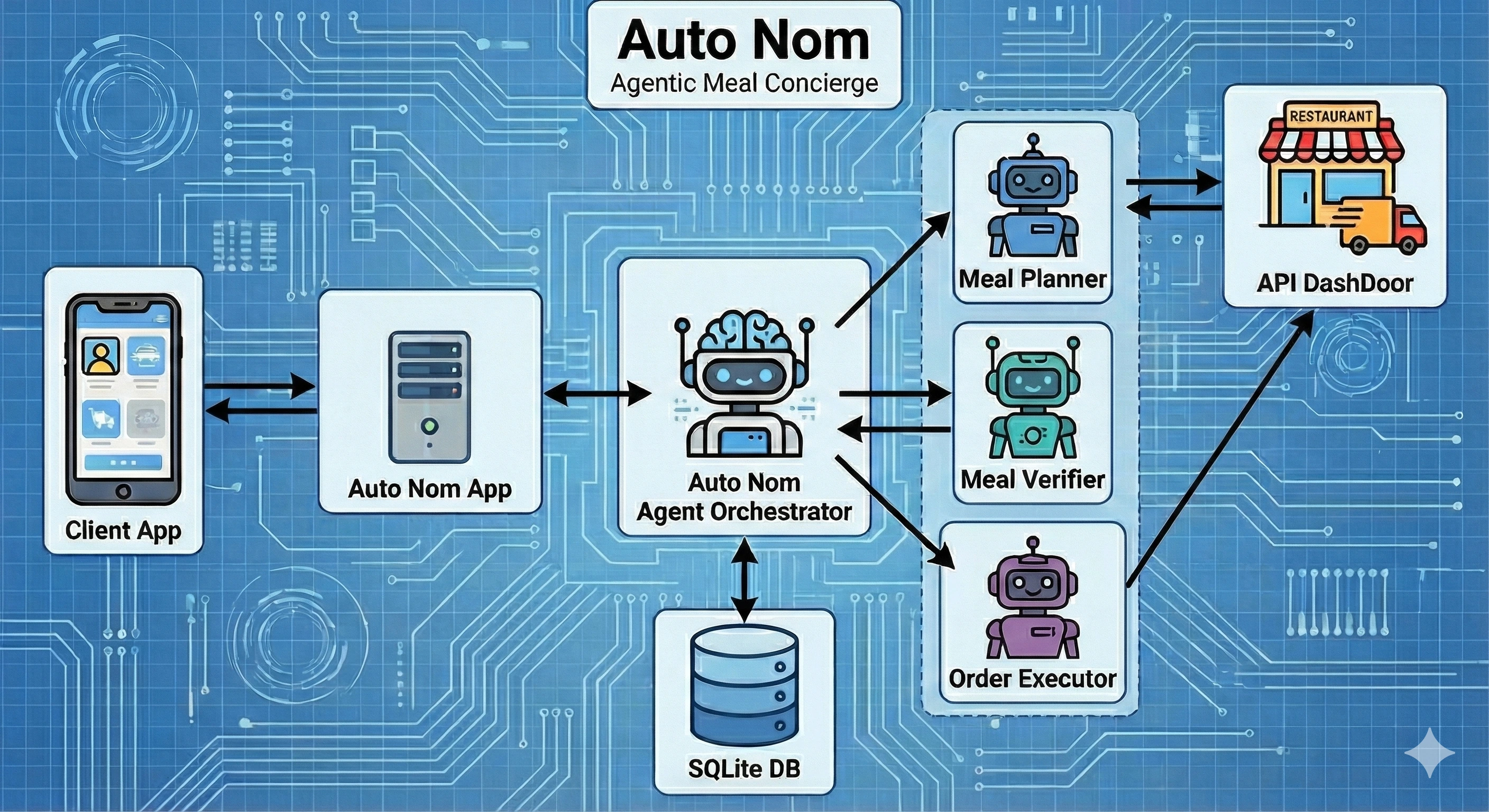

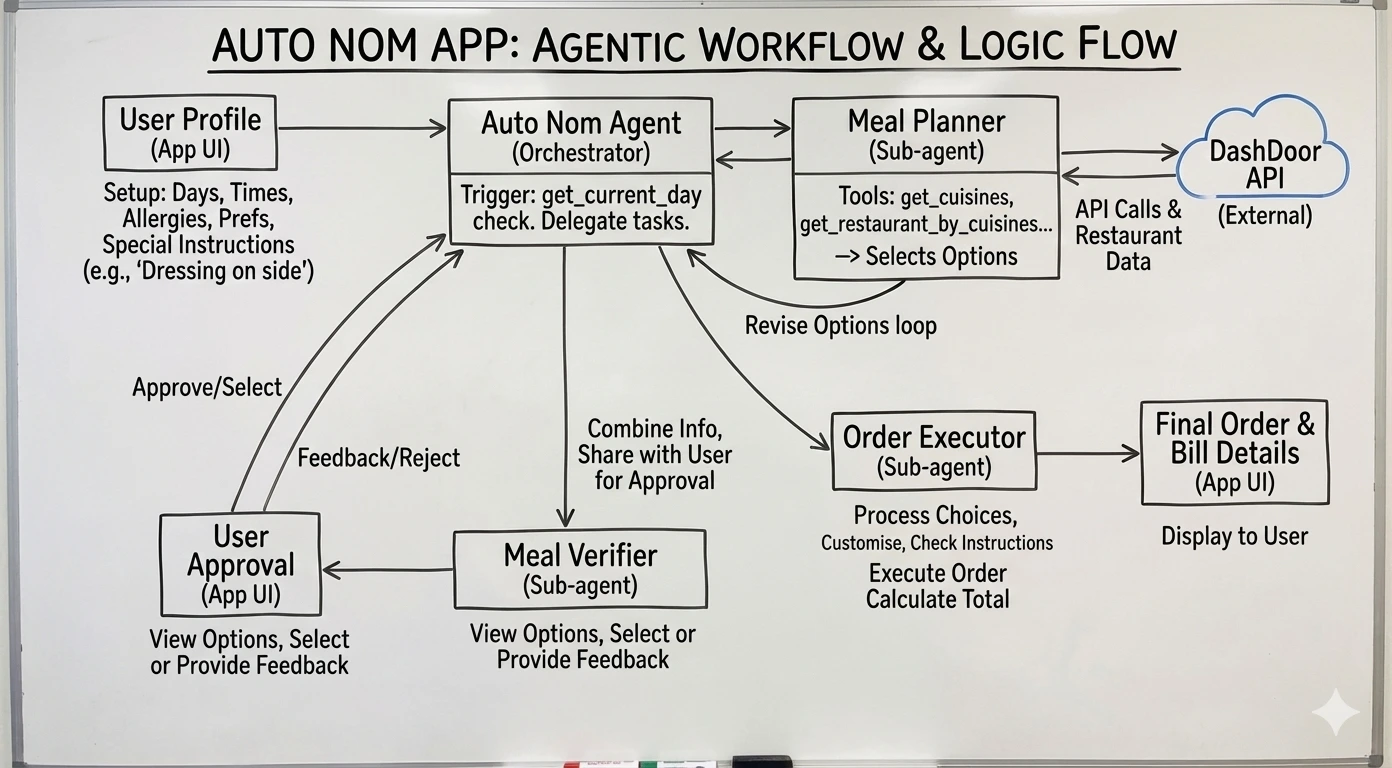

A production-grade 'Concierge Agent' that proactively manages the entire meal-planning lifecycle, from semantic discovery to final delivery, using a multi-agent architecture.

Responsibilities

- Architected a robust multi-agent system using Google ADK, implementing a Hub-and-Spoke pattern where a central Orchestrator manages specialized workers (Researcher, Verifier, Executor).

- Designed a deterministic Finite State Machine (FSM) backed by persistent SQLite storage to control non-deterministic LLM behavior, preventing infinite loops and ensuring reliable workflow transitions.

- Engineered a production-grade microservices environment using Docker Compose, orchestrating the Agent Runtime alongside a custom FastAPI mock service ('DashDoor') to simulate real-world API latency and data retrieval.
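
As a rough illustration of the FSM idea above, here is a minimal sketch of a state machine that persists its state in SQLite and rejects any transition the table does not allow. The state names, the `workflow` table, and the `MAX_STEPS` cap are assumptions made for this example; they are not taken from the project's actual code.

```python
import sqlite3

# Hypothetical states and transitions, chosen only for illustration.
TRANSITIONS = {
    "IDLE": {"RESEARCHING"},
    "RESEARCHING": {"VERIFYING", "FAILED"},
    "VERIFYING": {"RESEARCHING", "AWAITING_APPROVAL", "FAILED"},
    "AWAITING_APPROVAL": {"EXECUTING", "FAILED"},
    "EXECUTING": {"DONE", "FAILED"},
}
MAX_STEPS = 10  # assumed cap that stops a Researcher/Verifier ping-pong


class WorkflowFSM:
    """Keeps one workflow row per session so state survives restarts."""

    def __init__(self, conn, session_id):
        self.conn = conn
        self.session_id = session_id
        conn.execute(
            "CREATE TABLE IF NOT EXISTS workflow ("
            "session_id TEXT PRIMARY KEY, "
            "state TEXT NOT NULL, "
            "steps INTEGER NOT NULL)"
        )
        # A new session starts in IDLE; an existing row is left untouched.
        conn.execute(
            "INSERT OR IGNORE INTO workflow VALUES (?, 'IDLE', 0)",
            (session_id,),
        )
        conn.commit()

    def current(self):
        row = self.conn.execute(
            "SELECT state, steps FROM workflow WHERE session_id = ?",
            (self.session_id,),
        ).fetchone()
        return row[0], row[1]

    def transition(self, target):
        state, steps = self.current()
        # The LLM may propose any target; only whitelisted moves are applied.
        if target not in TRANSITIONS.get(state, set()):
            raise ValueError(f"illegal transition {state} -> {target}")
        # Past the step budget, the workflow is forced into FAILED.
        if steps + 1 > MAX_STEPS:
            target = "FAILED"
        self.conn.execute(
            "UPDATE workflow SET state = ?, steps = ? WHERE session_id = ?",
            (target, steps + 1, self.session_id),
        )
        self.conn.commit()
        return target
```

In this sketch the model's output is treated as a proposal: the table decides whether the move happens, and the step counter bounds how long any loop between states can run.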

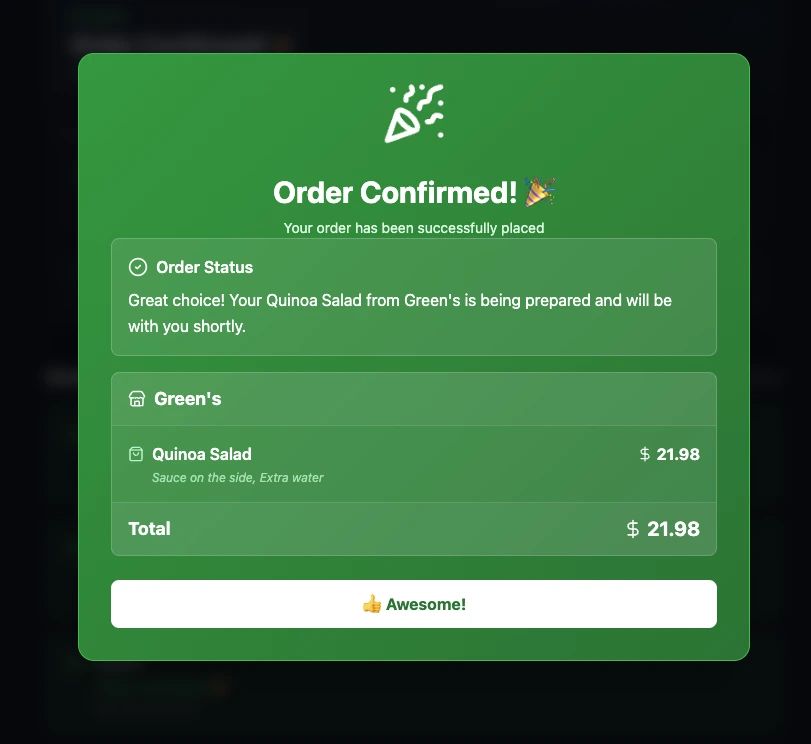

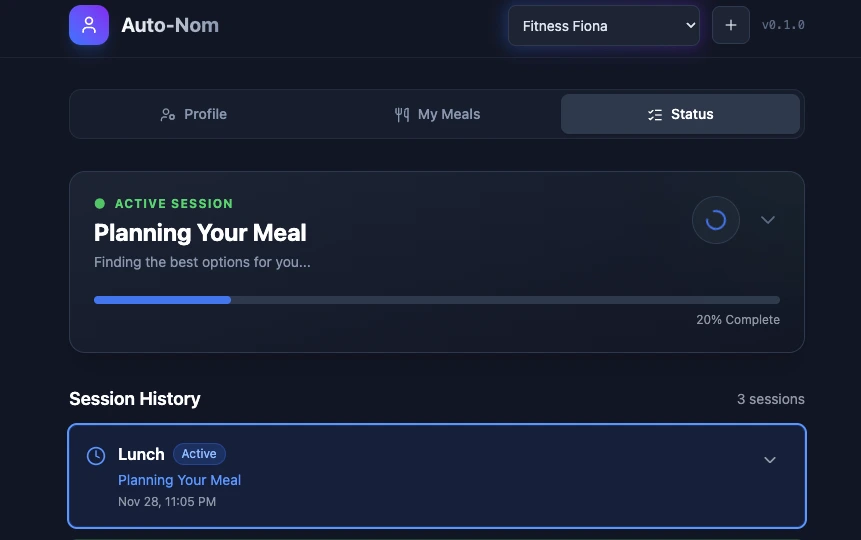

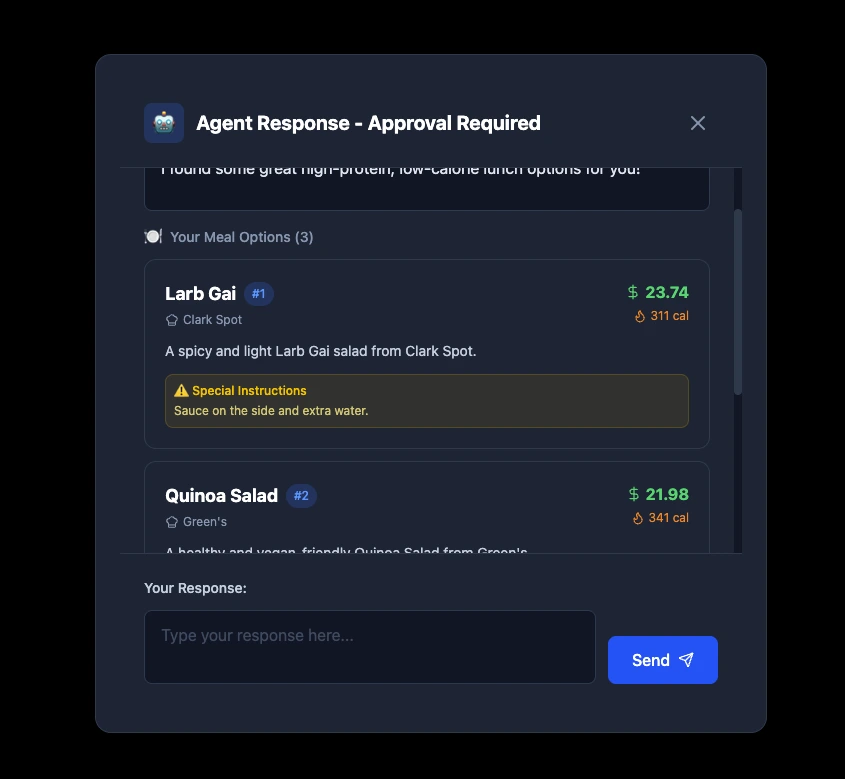

- Implemented a complex 'Human-in-the-Loop' pattern that pauses the agent's execution context during critical decision points and resumes it seamlessly via a React dashboard polling mechanism.

- Built a custom React + Vite frontend featuring a live 'Thought Stream' that visualizes the agent's internal reasoning, tool calls, and state changes in real time using Server-Sent Events (SSE).

- Developed advanced semantic search capabilities, allowing the agent to interpret vague user constraints (e.g., 'Something greasy but NOT Indian') and map them to specific database filters.

- Solved complex constraint satisfaction problems (e.g., 'Group order, $150 budget, 50% Vegan') by leveraging the agent's reasoning capabilities to autonomously bundle items into a valid cart.

- Optimized token usage and latency by designing specialized tools with strict schemas, guiding the LLM to use efficient search filters instead of processing large datasets in-context.
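
The filter-mapping and cart-constraint ideas above can be sketched as follows. This is an illustrative example under stated assumptions: `MenuItem`, `SearchFilters`, `search_menu`, `validate_cart`, and the sample menu are hypothetical names and data, not the project's real tools or the DashDoor API. The point is that the LLM only fills in a small typed filter object, while deterministic code does the filtering and the budget check.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MenuItem:
    name: str
    cuisine: str
    tags: frozenset
    price: float
    vegan: bool


@dataclass(frozen=True)
class SearchFilters:
    """Strict schema the agent fills in instead of reading the whole menu."""
    include_tags: frozenset = frozenset()
    exclude_cuisines: frozenset = frozenset()
    max_price: Optional[float] = None


def search_menu(items, filters):
    """Return items that carry every included tag and no excluded cuisine."""
    results = []
    for item in items:
        if not filters.include_tags <= item.tags:
            continue
        if item.cuisine in filters.exclude_cuisines:
            continue
        if filters.max_price is not None and item.price > filters.max_price:
            continue
        results.append(item)
    return results


def validate_cart(cart, budget, min_vegan_share):
    """Check a proposed cart against the budget and the vegan-share floor."""
    if not cart:
        return False
    total = sum(item.price for item in cart)
    vegan_share = sum(1 for item in cart if item.vegan) / len(cart)
    return total <= budget and vegan_share >= min_vegan_share


# Made-up sample data for the example.
MENU = [
    MenuItem("Samosa Platter", "indian", frozenset({"greasy", "fried"}), 9.0, True),
    MenuItem("Smash Burger", "american", frozenset({"greasy"}), 12.0, False),
    MenuItem("Vegan Loaded Fries", "american", frozenset({"greasy", "fried"}), 8.0, True),
    MenuItem("Garden Salad", "mediterranean", frozenset({"light"}), 10.0, True),
]

# 'Something greasy but NOT Indian' expressed as a filter object.
greasy_not_indian = SearchFilters(
    include_tags=frozenset({"greasy"}),
    exclude_cuisines=frozenset({"indian"}),
)
matches = search_menu(MENU, greasy_not_indian)
```

A request such as 'Group order, $150 budget, 50% Vegan' would then be checked with `validate_cart(cart, budget=150, min_vegan_share=0.5)` before the cart is shown for approval, so an invalid bundle proposed by the model is caught by plain code.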

Technology

Docker, FastAPI, Google ADK, Python, React, SQLite